Are you looking for a way to check whether a file exists from an Azure Data Factory (ADF) pipeline? Then you have reached the right place. In this blog, I will take you through a step-by-step approach, with a practical demo, that uses the Get Metadata activity together with the If Condition activity inside an ADF pipeline. Azure Data Factory is a popular service on the Azure cloud platform for migrating data from on-premises data centers to the Azure cloud. You may want to check whether a specific file exists at a given folder path in Azure Blob storage or Azure Data Lake Storage before running some transformation or scripting work for your business need. Whatever your reason, this article will guide you through checking for the file and a few related use cases. Let’s dive into it.

You can check if a file exists in Azure Data Factory by using these two steps:

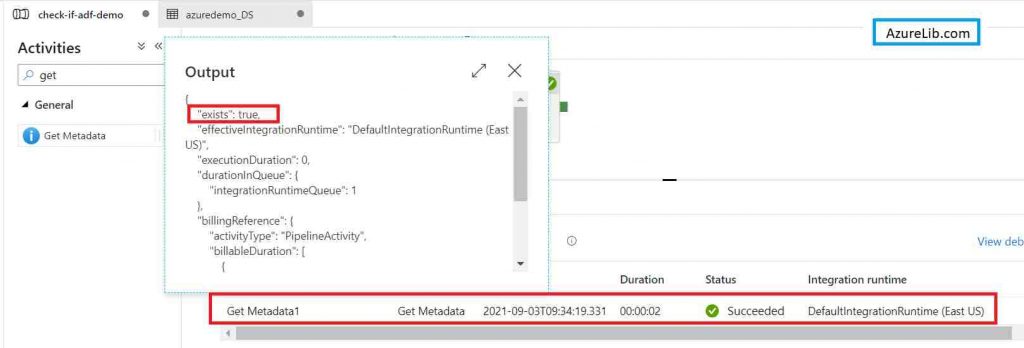

1. Use the Get Metadata activity with the field named ‘Exists’; this returns true or false.

2. Use the If Condition activity to make decisions based on the result of the Get Metadata activity.
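The two steps above can be sketched as a pipeline definition. The snippet below is only a minimal sketch, not the exact pipeline from the demo: it uses Python to build the kind of JSON that ADF's code view shows for a Get Metadata activity followed by an If Condition activity. The activity names (`CheckFile`, `IfFileExists`), the dataset name `BlobFileDataset`, and the empty branch lists are placeholder assumptions; the pipeline name is the sample name used in this walkthrough.

```python
import json

# Hypothetical names; replace them with the ones used in your own factory.
GET_METADATA_NAME = "CheckFile"
DATASET_NAME = "BlobFileDataset"

# Step 1: Get Metadata activity that requests only the 'exists' field.
get_metadata = {
    "name": GET_METADATA_NAME,
    "type": "GetMetadata",
    "typeProperties": {
        "dataset": {"referenceName": DATASET_NAME, "type": "DatasetReference"},
        "fieldList": ["exists"],
    },
}

# Step 2: If Condition activity that branches on the 'exists' value.
if_condition = {
    "name": "IfFileExists",
    "type": "IfCondition",
    # Run only after the metadata lookup has succeeded.
    "dependsOn": [
        {"activity": GET_METADATA_NAME, "dependencyConditions": ["Succeeded"]}
    ],
    "typeProperties": {
        "expression": {
            "value": f"@activity('{GET_METADATA_NAME}').output.exists",
            "type": "Expression",
        },
        # Placeholders: add the activities to run in each case.
        "ifTrueActivities": [],
        "ifFalseActivities": [],
    },
}

pipeline = {
    "name": "check-if-adf-demo",
    "properties": {"activities": [get_metadata, if_condition]},
}

pipeline_json = json.dumps(pipeline, indent=2)
print(pipeline_json)
```

The printed JSON can be compared with what the pipeline's code view shows once both activities are on the canvas; the activities you want to run in each case go into the two branch lists.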

Steps to check if file exists in Azure Blob Storage using Azure Data Factory

Prerequisite:

You should have an active Azure subscription.

You should have contributor access to the Azure Data Factory account.

Implementation:

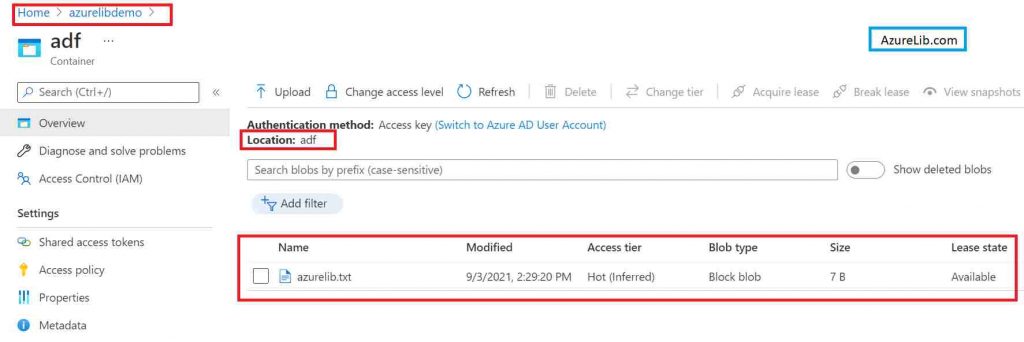

- First, go to Azure Blob storage and upload a dummy file, for example azurelib.txt, to the location where you want to check whether a file exists. If you already have some files there, you can use any of them instead.

- Using Azure Data Factory, we will now check whether this file exists in the Azure Blob storage location. If everything goes fine, the check should report that it exists.

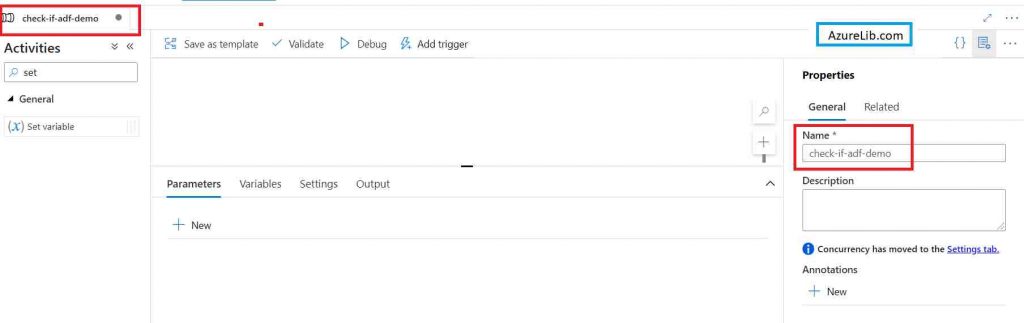

- Go to your Azure Data Factory account and create a sample pipeline named ‘check-if-adf-demo’. If you already have a pipeline, you can use that instead.

- Create a linked service of type Azure Blob storage. If you already have an Azure Blob storage linked service, you can reuse it; if you don’t have one, please create one. (Here I am assuming that you know how to create a linked service in Azure Data Factory. If you need any help, please follow this link: How to create Linked service in Azure data factory.)

- Create an Azure Blob storage dataset in Azure Data Factory that points to the folder path of your desired file. You can either hard-code the file path or make it dynamic by using a dataset parameter. The dataset uses the Azure Blob storage linked service from the previous step. (Here again I am assuming that you know how to create a dataset in Azure Data Factory. If you need any help, please follow this link: What is dataset in azure data factory.)

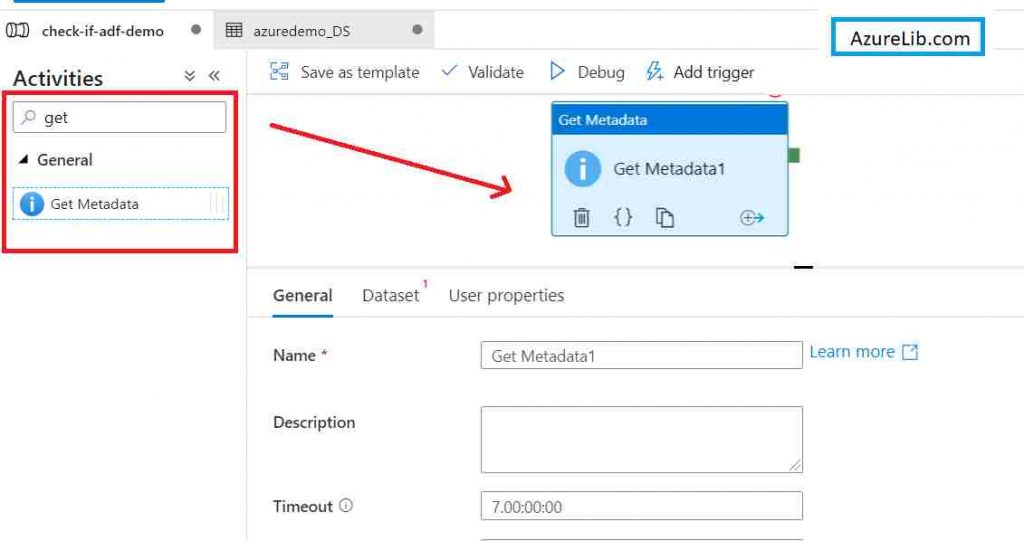

- Search for the Get Metadata activity in the activity search box. Drag and drop the Get Metadata activity onto the pipeline designer.

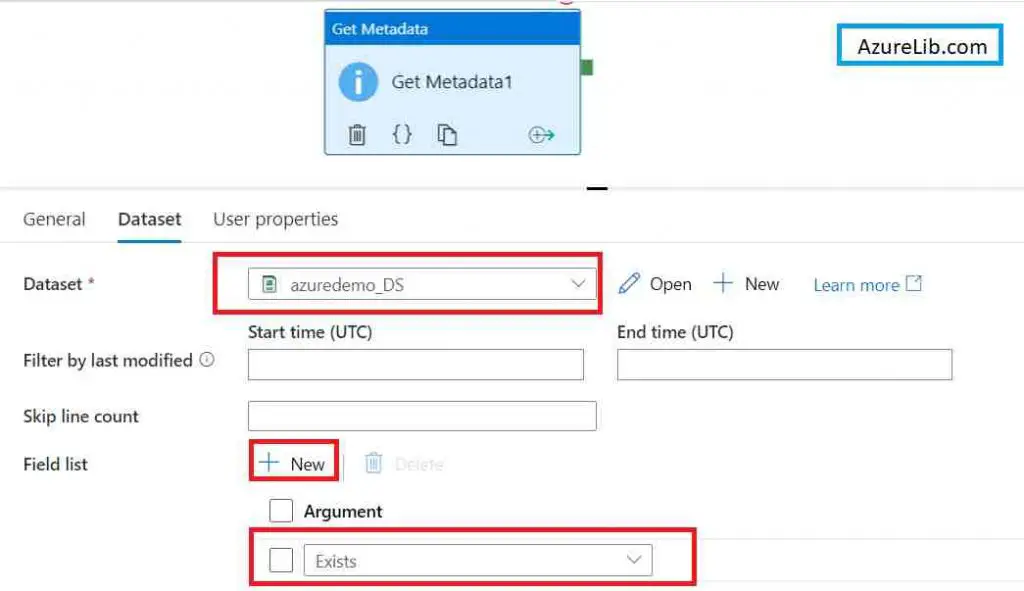

- Configure the Get Metadata activity. In the Dataset tab of the activity, select the Blob storage dataset that we created in the step above.

- Under the Field list attribute, click New; this creates a text box with a drop-down that lists the various metadata properties associated with files and folders. From the drop-down, select the ‘Exists’ property. This property returns true or false based on whether the file exists.

- Run the pipeline by clicking Debug. Once the pipeline has executed successfully, open the output of the Get Metadata activity. There you will see the ‘exists’ property in the JSON, which tells you whether the file exists at the given folder path.
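The dataset step above mentions a dynamic file path driven by a dataset parameter. Here is a minimal sketch of what such a dataset definition might look like in ADF's JSON view, written as a Python dict so it can be printed or checked. The dataset name, linked service name, container, folder, and the `fileName` parameter are all illustrative assumptions, and the `Binary` dataset type is chosen here only because an existence check does not need to parse the file. The sample output at the end is an abbreviated illustration of the debug output, not a captured result.

```python
import json

# All names and paths below are illustrative assumptions.
dataset = {
    "name": "BlobFileDataset",
    "properties": {
        "type": "Binary",
        "linkedServiceName": {
            "referenceName": "AzureBlobStorageLS",
            "type": "LinkedServiceReference",
        },
        # Dataset parameter that makes the file name dynamic.
        "parameters": {"fileName": {"type": "string"}},
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "demo-container",
                "folderPath": "input",
                "fileName": {
                    "value": "@dataset().fileName",
                    "type": "Expression",
                },
            }
        },
    },
}

# The Get Metadata activity supplies the parameter value when it
# references the dataset.
dataset_reference = {
    "referenceName": dataset["name"],
    "type": "DatasetReference",
    "parameters": {"fileName": "azurelib.txt"},
}

# Abbreviated, illustrative shape of the activity output; the real output
# carries additional runtime fields alongside 'exists'.
sample_output = json.loads('{"exists": true}')
file_exists = sample_output["exists"]

print(json.dumps(dataset, indent=2))
print("File exists:", file_exists)
```

With a hard-coded path, the `parameters` block is dropped and `fileName` is a plain string such as `azurelib.txt`.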

Now you can use this output as the input to the If Condition activity in Azure Data Factory to decide the next step based on whether the file exists. The expression for that would look like:

@activity('Replace_Get_Metadata_Activity_Name_Here').output.exists

Steps to check if file exists in Azure Data Lake Storage (ADLS) using Azure Data Factory

Prerequisite:

You should have an active Azure subscription.

You should have contributor access to the Azure Data Factory account.

Implementation:

- First, go to Azure Data Lake Storage and upload a dummy file, for example azurelib.txt, to the location where you want to check whether a file exists. If you already have some files there, you can use any of them instead.

- Using Azure Data Factory, we will now check whether this file exists in the Azure Data Lake Storage location. If everything goes fine, the check should report that it exists.

- Go to your Azure Data Factory account and create a sample pipeline named ‘check-if-adf-demo’. If you already have a pipeline, you can use that instead.

- Create a linked service of type Azure Data Lake Storage. If you already have an Azure Data Lake Storage linked service, you can reuse it; if you don’t have one, please create one. (Here I am assuming that you know how to create a linked service in Azure Data Factory. If you need any help, please follow this link: How to create Linked service in Azure data factory.)

- Create an Azure Data Lake Storage dataset in Azure Data Factory that points to the folder path of your desired file. You can either hard-code the file path or make it dynamic by using a dataset parameter. The dataset uses the Azure Data Lake Storage linked service from the previous step. (Here again I am assuming that you know how to create a dataset in Azure Data Factory. If you need any help, please follow this link: What is dataset in azure data factory.)

- Search for the Get Metadata activity in the activity search box. Drag and drop the Get Metadata activity onto the pipeline designer.

- Configure the Get Metadata activity. In the Dataset tab of the activity, select the Data Lake Storage dataset that we created in the step above.

- Under the Field list attribute, click New; this creates a text box with a drop-down that lists the various metadata properties associated with files and folders. From the drop-down, select the ‘Exists’ property. This property returns true or false based on whether the file exists.

- Run the pipeline by clicking Debug. Once the pipeline has executed successfully, open the output of the Get Metadata activity. There you will see the ‘exists’ property in the JSON, which tells you whether the file exists at the given folder path.
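The Data Lake steps mirror the Blob storage ones; in the JSON view, the main difference sits in the dataset's location block. The sketch below assumes ADLS Gen2 (the walkthrough does not say which generation is used), where the location type is `AzureBlobFSLocation` and the container is called `fileSystem`. The helper `build_location` and the sample names are hypothetical and exist only to show the two shapes side by side.

```python
def build_location(store: str, container: str, folder_path: str, file_name: str) -> dict:
    """Return a dataset 'location' block for the given store kind.

    'store' is a hypothetical switch used only in this sketch:
    'blob' for Azure Blob storage, 'adls_gen2' for Data Lake Storage Gen2.
    """
    common = {"folderPath": folder_path, "fileName": file_name}
    if store == "blob":
        return {
            "type": "AzureBlobStorageLocation",
            "container": container,
            **common,
        }
    if store == "adls_gen2":
        return {
            "type": "AzureBlobFSLocation",
            "fileSystem": container,
            **common,
        }
    raise ValueError(f"unsupported store kind: {store}")


# Illustrative file system and folder names.
adls_location = build_location("adls_gen2", "demo-filesystem", "input", "azurelib.txt")
print(adls_location)
```

Everything else, including the ‘Exists’ field in the Get Metadata activity and the If Condition expression, stays the same as in the Blob storage example.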

Recommendations

Many Azure data engineers find it a little difficult to understand real-world scenarios and face challenges in designing a complete enterprise solution for them. Hence I would recommend going through these links to gain a better understanding of Azure Data Factory.

Azure Data Engineer Real World scenarios

Azure Databricks Spark Tutorial for beginner to advance level

Latest Azure DevOps Interview Questions and Answers

You can also check out and pin this great YouTube channel for learning Azure for free from industry experts

You can also read about the custom activity in Azure Data Factory here: How to run python script in Azure Data Factory

If you are looking for the Microsoft Docs for Azure Data Factory, you can find them here: Link

Final Thoughts

With this, we have reached the last section of the article. In this article, we learned how to check if a file exists in Azure Blob storage or Azure Data Lake Storage from Azure Data Factory. I hope you found this article insightful and learned something new about the Get Metadata activity in Azure Data Factory.

Please share your comments, suggestions, and feedback in the comment section below.