Azure Data Factory (ADF) is Azure's cloud service for ETL (extract, transform and load) and data movement, and it is one of the most widely used data services on Azure. In this blog I will walk you step by step through creating your first Azure Data Factory account. The only prerequisite is an Azure account with an active subscription.

Let’s go to the Azure portal (https://portal.azure.com).

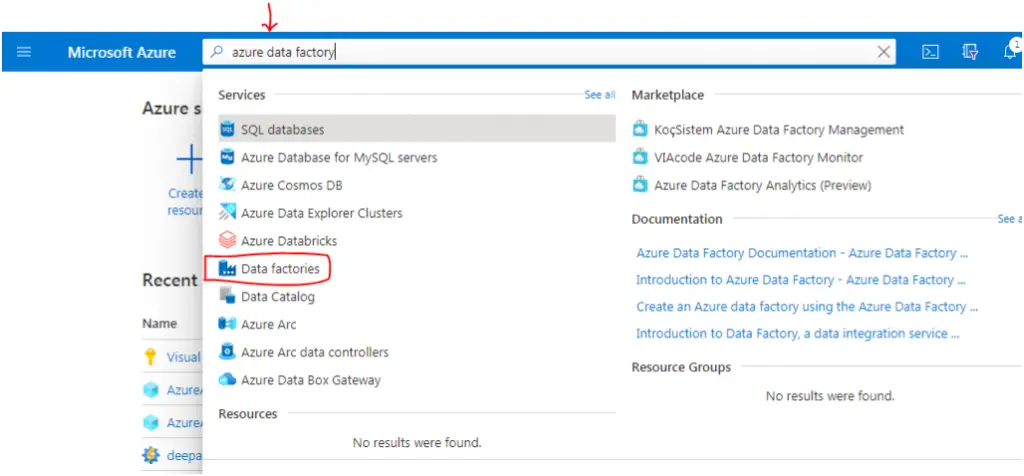

In the search box, type "data factory" and select **Data factories** from the drop-down list.

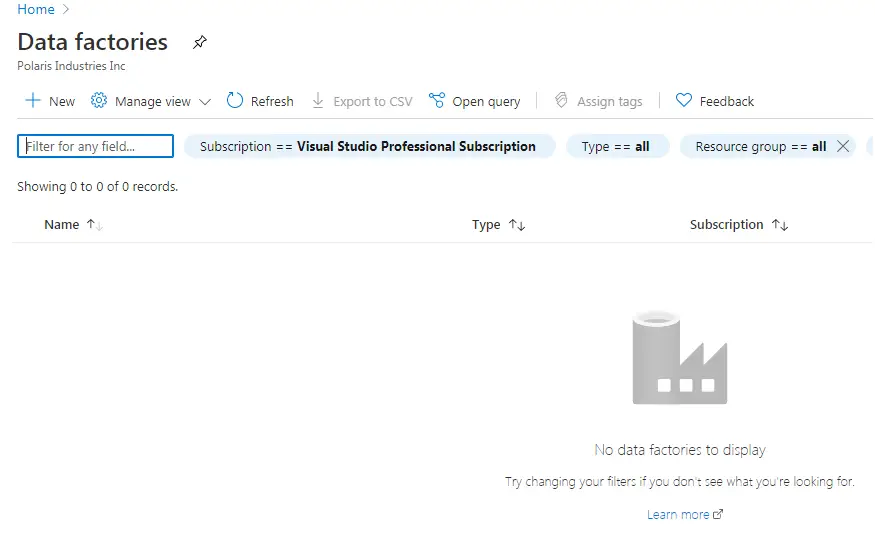

As you can see, no data factory account exists yet. Click the **+** (Create) button to create a new one.

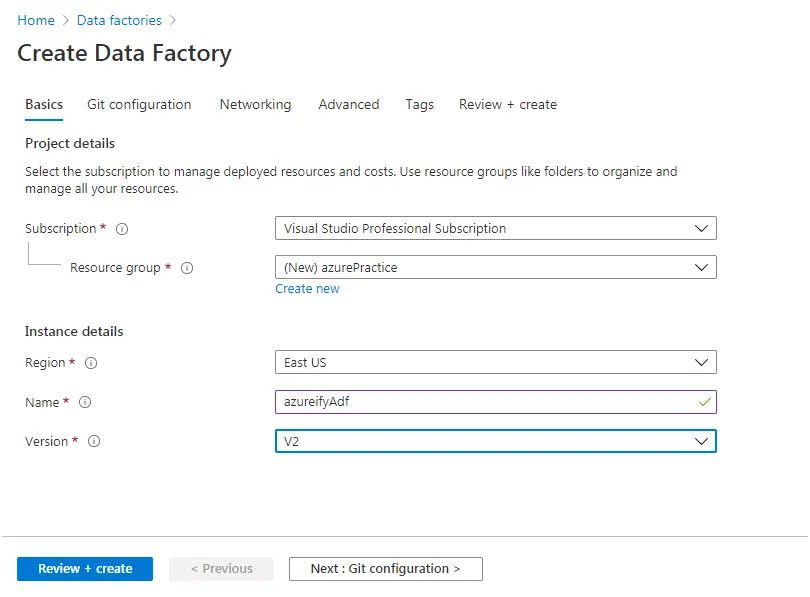

To create the data factory account, fill in the following details. First select your subscription, then a resource group. If you already have a resource group, select it from the drop-down; otherwise click **Create new** to create the resource group in which your ADF account will live. Next, pick a region and enter a name for the ADF account; this name must be unique across all of Azure. Select **V2** as the version and click **Next**.

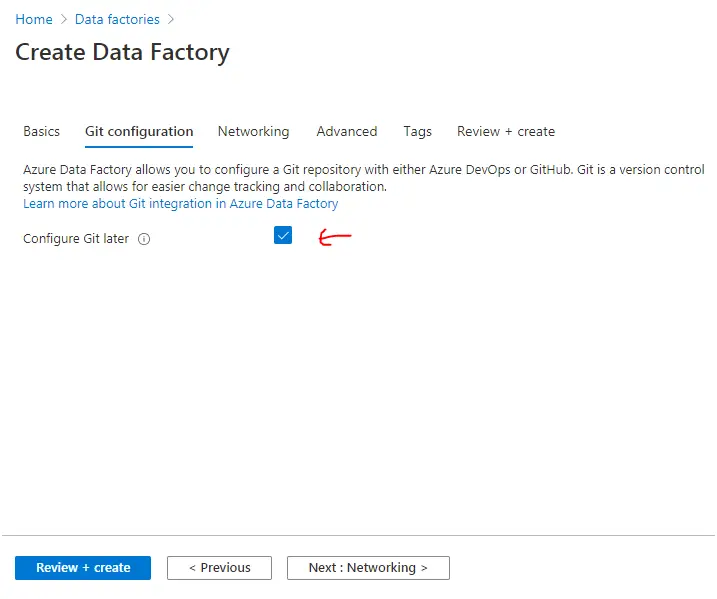
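
The name is the field that most often fails validation. As a sketch, the naming rules Microsoft documents for data factory names (3 to 63 characters; letters, digits and hyphens only; must start and end with a letter or digit; no consecutive hyphens) can be checked locally before you type the name into the portal. These rules are my reading of Microsoft's documentation and may change, and the function name `is_valid_factory_name` is hypothetical. Global uniqueness cannot be checked locally; only Azure can confirm that.

```python
import re

# Assumed naming rules for a data factory (verify against Microsoft's current
# "Azure Data Factory naming rules" page): letters, digits and single hyphens,
# starting and ending with a letter or digit, 3-63 characters long.
_NAME_PATTERN = re.compile(r"[A-Za-z0-9]+(?:-[A-Za-z0-9]+)*")


def is_valid_factory_name(name: str) -> bool:
    """Return True if `name` satisfies the local naming rules.

    This does NOT check global uniqueness; Azure does that when you
    click Next or Create in the portal.
    """
    if not 3 <= len(name) <= 63:
        return False
    # fullmatch requires the whole string to be alphanumeric groups
    # separated by single hyphens.
    return _NAME_PATTERN.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["adf-demo-dev", "adf--demo", "-adf", "ad", "adf_demo"]:
        print(candidate, is_valid_factory_name(candidate))
```

Only the first candidate passes: the others have a double hyphen, a leading hyphen, too few characters, or an underscore.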

Select the **Configure Git later** checkbox and click **Next**. (Git integration is needed for DevOps, which I will cover in a separate blog.)

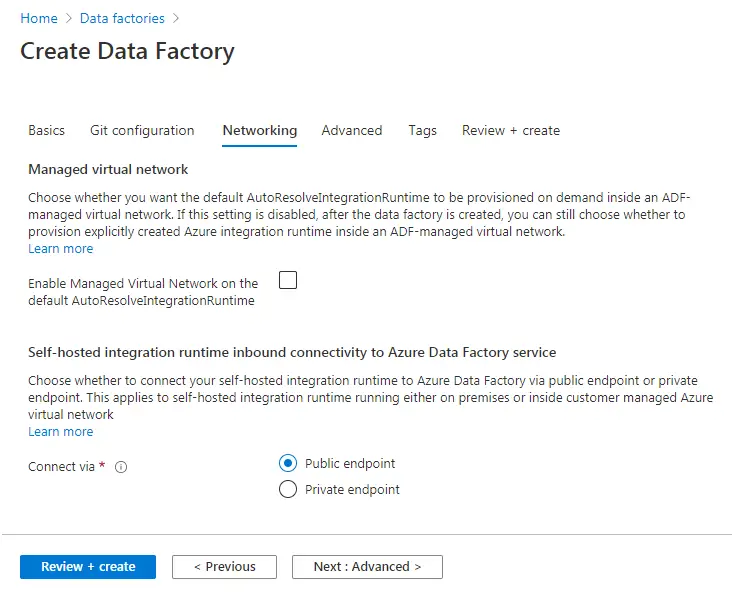

Leave these settings unchanged for now and click **Next**.

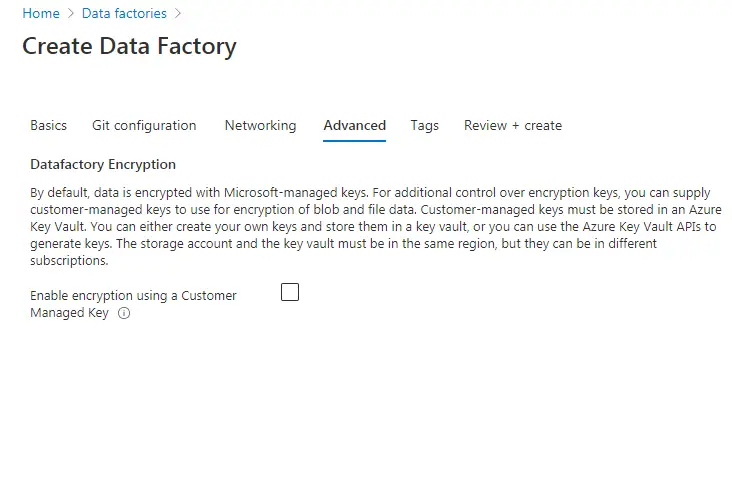

Here too, keep the defaults and click **Next**.

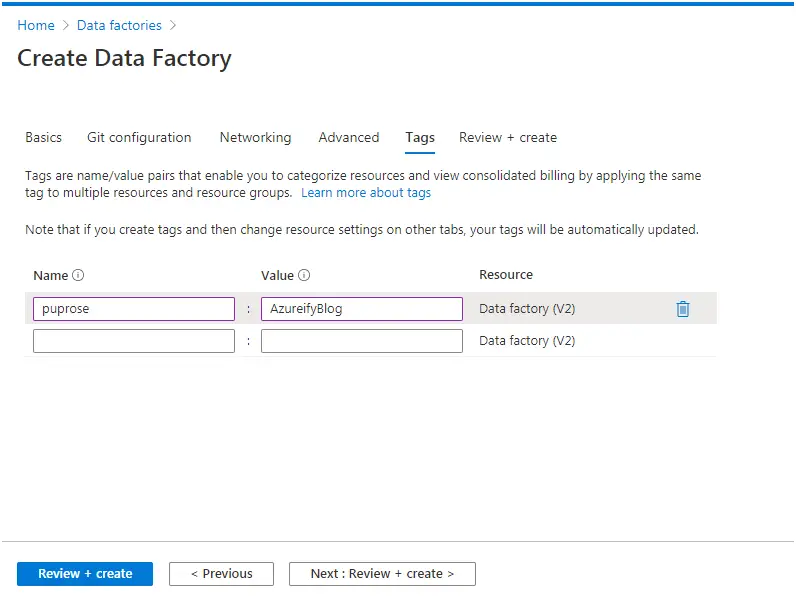

You can add tags here. Tags are optional; if you want to skip them, simply click **Next**.

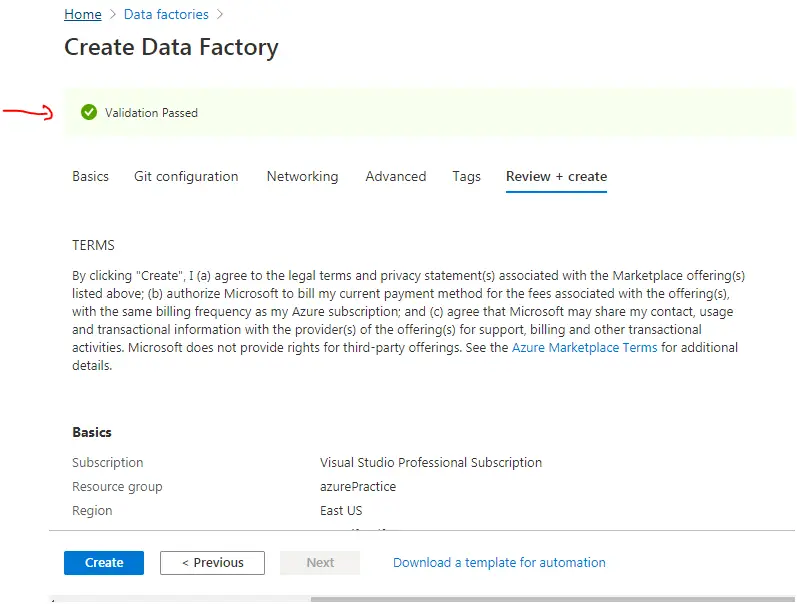

Once you see **Validation passed**, click **Create** and your data factory account will be created.

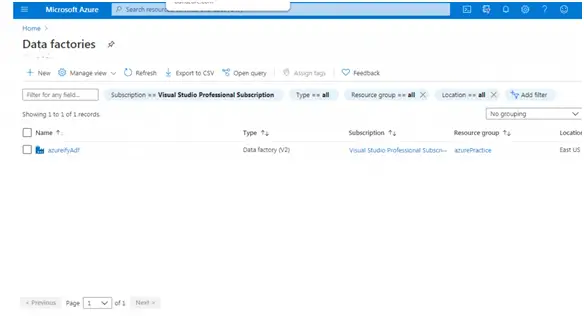
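
For reference, the choices made in the wizard (name, region, tags, Git configured later, everything else left at its default) can also be written down as an Azure Resource Manager (ARM) template, which helps when you need to recreate the factory for another environment. The sketch below builds such a template in Python. The resource type `Microsoft.DataFactory/factories` and API version `2018-06-01` are the ones used for ADF V2; the function name `factory_template`, the system-assigned identity and the example values are my assumptions, so compare the output with the template offered by the **Download a template for automation** link on the Review + create page before relying on it.

```python
import json
from typing import Dict, Optional


def factory_template(name: str, location: str,
                     tags: Optional[Dict[str, str]] = None) -> dict:
    """Return a minimal ARM deployment template for one V2 data factory."""
    resource = {
        # Resource type and API version used for Data Factory V2.
        "type": "Microsoft.DataFactory/factories",
        "apiVersion": "2018-06-01",
        "name": name,
        "location": location,
        # Assumption: mirror the portal, which creates a system-assigned
        # managed identity for the factory.
        "identity": {"type": "SystemAssigned"},
        # Empty properties: no Git repository ("Configure Git later");
        # assumed to leave networking and advanced settings at their defaults.
        "properties": {},
    }
    if tags:
        # Tags are optional, just as on the Tags tab of the wizard.
        resource["tags"] = dict(tags)
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [resource],
    }


if __name__ == "__main__":
    template = factory_template("adf-demo-dev", "eastus", {"env": "dev"})
    print(json.dumps(template, indent=2))
```

If you save the printed JSON as `template.json`, it could be deployed with `az deployment group create --resource-group <your-resource-group> --template-file template.json`.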

When you go back to **Data factories**, you will see your newly created data factory account. Click it to open the ADF account.

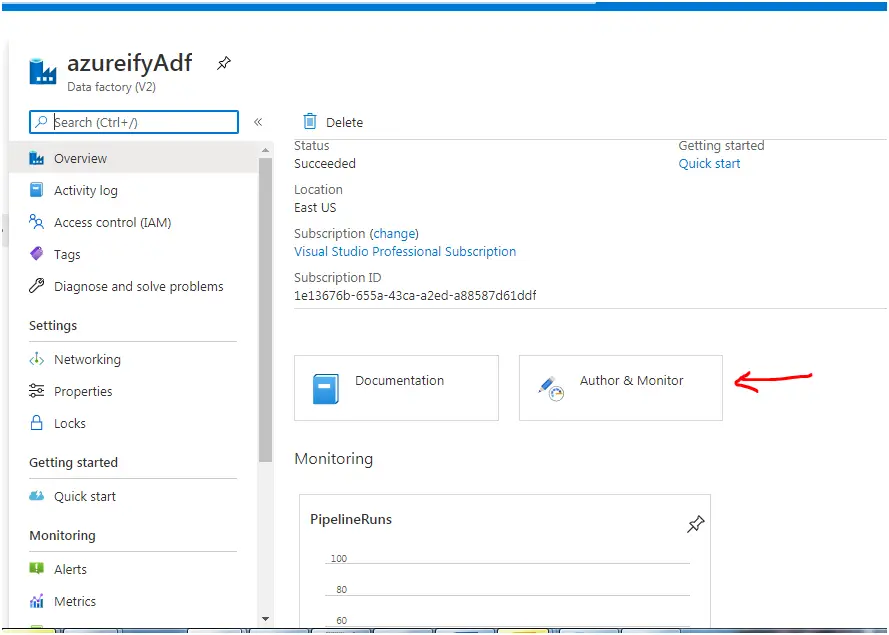

Click the **Author & Monitor** tab.

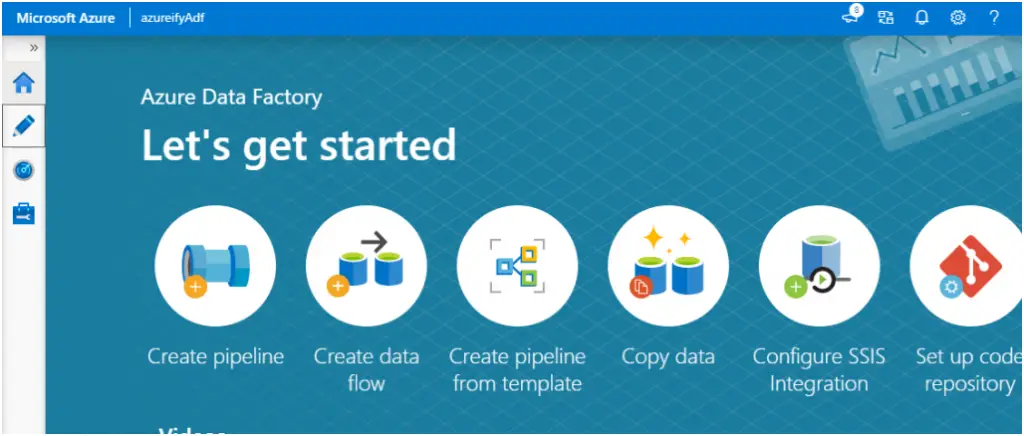

This takes you to the Azure Data Factory wizard, where you can start building pipelines.

Summary: In most cases you only need one ADF account per environment (for example dev, test and prod), and you then create multiple pipelines under that account. The next step is to create a linked service and a dataset.